Nov 22 2019

Duke University engineers have created a microscope that can adapt its colors, patterns, and lighting angles, and that can teach itself the optimal settings for a given diagnostic task.

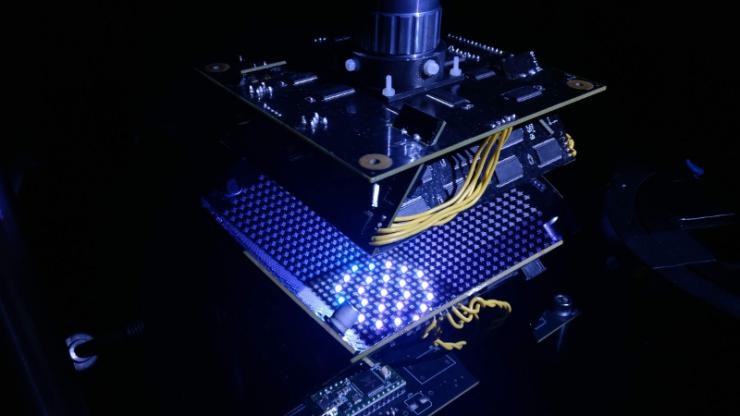

Duke engineers have developed a new type of microscope that uses a bowl studded with LED lights of various colors, together with lighting schemes produced by machine learning. Image Credit: Duke University.


In the initial proof-of-principle study, the microscope simultaneously developed a lighting pattern and a classification system that together let it detect red blood cells infected by the malaria parasite quickly and more accurately than trained physicians and other machine learning methods.

The results were published online in the November 19 issue of the journal Biomedical Optics Express.

“A standard microscope illuminates a sample with the same amount of light coming from all directions, and that lighting has been optimized for human eyes over hundreds of years,” said Roarke Horstmeyer, assistant professor of biomedical engineering at Duke.

But computers can see things humans can’t. So not only have we redesigned the hardware to provide a diverse range of lighting options, we’ve allowed the microscope to optimize the illumination for itself.

Roarke Horstmeyer, Assistant Professor of Biomedical Engineering, Duke University

Instead of diffusing white light from below to illuminate the slide uniformly, the engineers built a bowl-shaped light source with LEDs embedded throughout its surface. This allows samples to be lit in different colors and from angles of up to nearly 90°, which casts shadows and highlights different features of the sample depending on which pattern of LEDs is used.

The researchers then fed the microscope many samples of malaria-infected red blood cells prepared as thin smears, in which the cell bodies remain intact and are ideally spread in a single layer on a microscope slide.

The microscope used a type of machine learning algorithm called a convolutional neural network to learn which features of the sample matter most for diagnosing malaria and how best to highlight them.
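The article does not describe the implementation, so the following is only a minimal sketch of the general idea of learning the lighting and the classifier together. It assumes (this is not stated above) that an image under any LED pattern can be modeled as a brightness-weighted sum of images taken under single LEDs, so the LED brightnesses become trainable parameters placed in front of the classifier. To keep it short, the convolutional neural network is swapped for a plain logistic-regression classifier, and the sizes, the synthetic data, and the names `make_sample`, `illuminate`, `train`, and `SIGNAL_LED` are all hypothetical.

```python
import math
import random

# Hypothetical toy sizes; the real microscope has many more LEDs and pixels.
NUM_LEDS = 3
NUM_PIXELS = 4
SIGNAL_LED = 2  # synthetic assumption: only this LED's angle/color reveals the parasite


def make_sample(rng, infected):
    """Return (per-LED image stack, label) for one synthetic cell.

    Each single-LED image is zero-mean Gaussian noise (think background-subtracted);
    an infected cell adds a bright spot at pixel 0, but only under SIGNAL_LED.
    """
    stack = [[rng.gauss(0.0, 0.3) for _ in range(NUM_PIXELS)] for _ in range(NUM_LEDS)]
    if infected:
        stack[SIGNAL_LED][0] += 2.0
    return stack, 1 if infected else 0


def illuminate(stack, w):
    """Assumed physical layer: image under pattern w = sum_k w[k] * (image under LED k)."""
    return [sum(w[k] * stack[k][p] for k in range(NUM_LEDS)) for p in range(NUM_PIXELS)]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(stack, w, v, b):
    """Probability of 'infected' from a logistic-regression classifier (stand-in for a CNN)."""
    x = illuminate(stack, w)
    return sigmoid(sum(vp * xp for vp, xp in zip(v, x)) + b)


def train(data, epochs=300, lr=0.2):
    """Jointly fit LED brightnesses w and classifier (v, b) with full-batch gradient descent."""
    w = [1.0 / NUM_LEDS] * NUM_LEDS  # start from uniform illumination
    v = [0.0] * NUM_PIXELS
    b = 0.0
    n = len(data)
    for _ in range(epochs):
        gw, gv, gb = [0.0] * NUM_LEDS, [0.0] * NUM_PIXELS, 0.0
        for stack, y in data:
            x = illuminate(stack, w)
            # dLoss/dz for binary cross-entropy with a sigmoid output
            err = sigmoid(sum(vp * xp for vp, xp in zip(v, x)) + b) - y
            for p in range(NUM_PIXELS):
                gv[p] += err * x[p]
            gb += err
            for k in range(NUM_LEDS):
                # Chain rule through the physical layer: dz/dw[k] = v . (image under LED k)
                gw[k] += err * sum(v[p] * stack[k][p] for p in range(NUM_PIXELS))
        v = [vp - lr * g / n for vp, g in zip(v, gv)]
        b -= lr * gb / n
        # LED brightness cannot be negative: project back onto w >= 0 after each step.
        w = [max(0.0, wk - lr * g / n) for wk, g in zip(w, gw)]
    return w, v, b


def accuracy(data, w, v, b):
    return sum((predict(s, w, v, b) >= 0.5) == (y == 1) for s, y in data) / len(data)


if __name__ == "__main__":
    rng = random.Random(0)
    train_set = [make_sample(rng, i % 2 == 0) for i in range(200)]
    test_set = [make_sample(rng, i % 2 == 0) for i in range(200)]
    w, v, b = train(train_set)
    print("learned LED brightnesses:", [round(wk, 2) for wk in w])
    print("held-out accuracy:", accuracy(test_set, w, v, b))
```

Because the brightness weights sit in front of the classifier, the same gradient that improves the classifier also reshapes the lighting. On this synthetic data the weight on the one informative LED should grow while the others shrink toward zero, a toy analogue of the algorithm settling on a particular LED pattern.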

The algorithm ultimately settled on a ring-shaped pattern of differently colored LEDs shining from relatively high angles. Although the resulting images are noisier than a regular microscope image, they highlight the malaria parasite as a bright spot and are classified correctly nearly 90% of the time. Trained physicians and other machine learning algorithms typically reach an accuracy of about 75%.

The patterns it’s picking out are ring-like with different colors that are non-uniform and are not necessarily obvious. Even though the images are dimmer and noisier than what a clinician would create, the algorithm is saying it’ll live with the noise, it just really wants to get the parasite highlighted to help it make a diagnosis.

Roarke Horstmeyer, Assistant Professor of Biomedical Engineering, Duke University

Horstmeyer then sent the classification algorithm and the LED pattern to a collaborator's lab in another part of the world to check whether the results would carry over to a different microscope setup. That lab achieved similar success.

“Physicians have to look through a thousand cells to find a single malaria parasite,” noted Horstmeyer. “And because they have to zoom in so closely, they can only look at maybe a dozen at a time, and so reading a slide takes about 10 minutes. If they only had to look at a handful of cells that our microscope has already picked out in a matter of seconds, it would greatly speed up the process.”

The researchers also showed that the microscope works well with thick blood smear preparations, in which the red blood cells form a highly non-uniform background and may be broken apart. On these preparations, the machine learning algorithm was successful 99% of the time.

Horstmeyer said the higher accuracy is expected, because the thick smears tested were more heavily stained than the thin smears and showed higher contrast. However, thick smears also take longer to prepare, and part of the motivation for the project is to shorten diagnosis times in low-resource settings, where trained physicians are scarce and bottlenecks are the norm.

Following this initial success, Horstmeyer has continued to develop both the microscope and its machine learning algorithm.

A team of Duke University engineering graduate students has formed a startup company, SafineAI, to develop a miniaturized version of the reconfigurable LED microscope concept, which has already won a $120,000 prize at a local pitch competition.

Meanwhile, Horstmeyer is working on a different machine learning algorithm to create a version of the microscope that can adapt its LED pattern to each specific slide it reads.

We’re basically trying to impart some brains into the image acquisition process. We want the microscope to use all of its degrees of freedom. So instead of just dumbly taking images, it can play around with the focus and illumination to try to get a better idea of what’s on the slide, just like a human would.

Roarke Horstmeyer, Assistant Professor of Biomedical Engineering, Duke University