Jan 8 2021

An international research team led by Swinburne University of Technology has unveiled the world’s fastest and most powerful optical neuromorphic processor for artificial intelligence (AI).

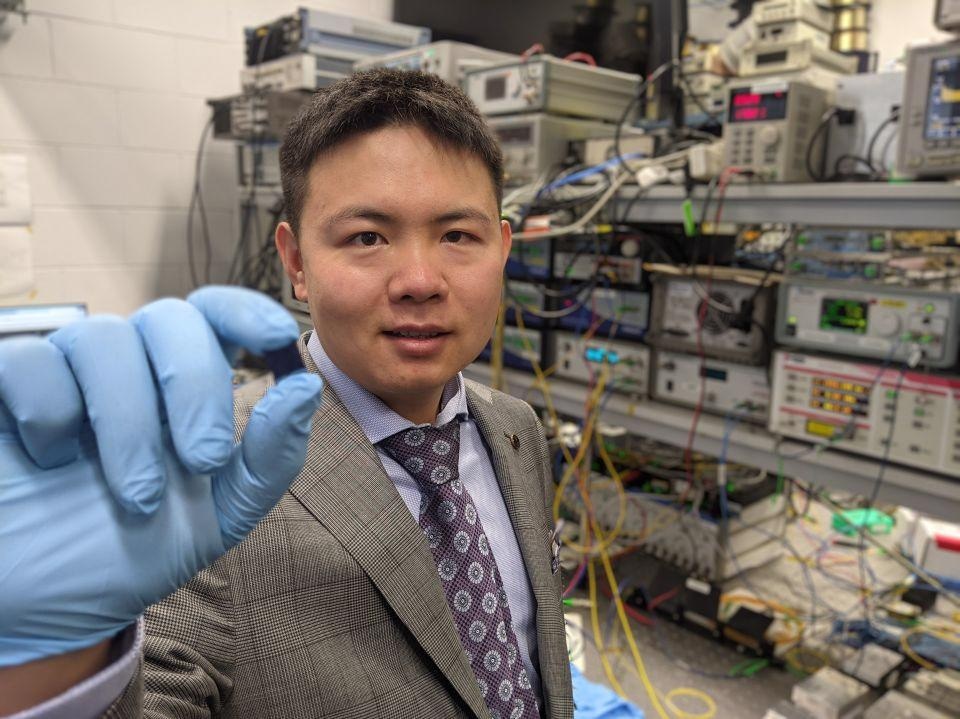

Dr Xingyuan (Mike) Xu with the integrated optical microcomb chip, which forms the core part of the optical neuromorphic processor. Image Credit: Swinburne University.

The new processor operates at more than 10 trillion operations per second (TeraOPs/s) and can process ultra-large-scale data.

The work, published in the leading journal Nature, represents a major step forward for neuromorphic processing and for neural networks in general.

Artificial neural networks are a key form of AI that can 'learn' and perform complex operations. As a result, they are widely used in natural language processing, computer vision, speech translation, facial recognition, medical diagnosis, strategy games and many other areas.

Artificial neural networks were largely inspired by the biological structure of the brain’s visual cortex. They extract key features from raw data to predict properties and behavior with unprecedented simplicity and accuracy.

The research team, led by Professor David Moss from Swinburne University of Technology, Dr Xingyuan (Mike) Xu from Swinburne University of Technology and Monash University, and Distinguished Professor Arnan Mitchell from RMIT University, achieved a major advance in optical neural networks: they substantially increased both the processing power and the computing speed of these networks.

The researchers demonstrated an optical neuromorphic processor that operates more than 1000 times faster than any previous processor. The system also processes record-sized, ultra-large-scale images, enough to achieve full facial image recognition, something other optical processors have been unable to accomplish.

"This breakthrough was achieved with ‘optical micro-combs,’ as was our world-record internet data speed reported in May 2020."

David Moss, Professor and Director, Optical Sciences Centre, Swinburne University of Technology

Although state-of-the-art electronic processors such as the Google TPU can operate beyond 100 TeraOPs/s, they do so using a very large number of parallel processors. In contrast, the optical system demonstrated by the researchers uses only a single processor. This was made possible by a new technique that simultaneously interleaves the data in the wavelength, time and spatial dimensions using an integrated micro-comb source.
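To give a rough feel for how interleaving data across wavelength and time can perform a convolution, the toy model below may help. It is a simplified, idealized sketch under our own assumptions, not the published device model: each comb wavelength carries a copy of the input sequence, each wavelength is scaled by one kernel weight, wavelength `k` is delayed by exactly `k` time slots, and the detector sums all wavelengths in each slot. Noise, the fact that optical power cannot be negative, and all other physical constraints are ignored. The function name `interleaved_convolution` is hypothetical.

```python
from typing import List, Sequence


def interleaved_convolution(x: Sequence[float], weights: Sequence[float]) -> List[float]:
    """Toy model of a time-wavelength interleaved convolution (illustrative only).

    Assumptions, not taken from the published device:
    - every comb line (wavelength k) carries a copy of the input sequence x,
      one value per time slot;
    - comb line k is scaled by weights[k];
    - comb line k is delayed by exactly k time slots;
    - a single detector adds the contributions of all comb lines in each slot.
    """
    num_lines = len(weights)
    num_slots = len(x) + num_lines - 1  # length of a full discrete convolution
    detected = [0.0] * num_slots
    for k, w in enumerate(weights):       # one pass per wavelength
        for t, value in enumerate(x):     # one pass per input time slot
            detected[t + k] += w * value  # weighted copy, delayed by k slots
    return detected


if __name__ == "__main__":
    print(interleaved_convolution([1, 2, 3], [1, 1]))  # prints [1.0, 3.0, 5.0, 3.0]
```

In this model each detected slot equals the sum over `k` of `weights[k] * x[t - k]`, which is a discrete convolution. Because every wavelength contributes one multiply-and-add per time slot, the model's throughput grows with both the number of comb lines and the symbol rate, which illustrates why a single device with many wavelengths can reach high operation counts.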

Micro-combs are relatively new devices that act like a rainbow made up of scores of high-quality infrared lasers on a single chip. They are faster, cheaper, smaller and lighter than other optical sources.

"In the 10 years since I co-invented them, integrated micro-comb chips have become enormously important and it is truly exciting to see them enabling these huge advances in information communication and processing. Micro-combs offer enormous promise for us to meet the world’s insatiable need for information."

David Moss, Professor and Director, Optical Sciences Centre, Swinburne University of Technology

According to Dr Xu, a Swinburne alumnus and postdoctoral fellow in the Department of Electrical and Computer Systems Engineering at Monash University, “This processor can serve as a universal ultrahigh bandwidth front end for any neuromorphic hardware, optical or electronic based, bringing massive-data machine learning for real-time ultra-high bandwidth data within reach.”

“We’re currently getting a sneak peek of how the processors of the future will look. It’s really showing us how dramatically we can scale the power of our processors through the innovative use of microcombs,” explained Dr Xu, who is also the co-lead author of the study.

"This technology is applicable to all forms of processing and communications, and it will have a huge impact. Long term we hope to realise fully integrated systems on a chip, greatly reducing cost and energy consumption."

Arnan Mitchell, Distinguished Professor, RMIT University

“Convolutional neural networks have been central to the artificial intelligence revolution, but existing silicon technology increasingly presents a bottleneck in processing speed and energy efficiency,” said Professor Damien Hicks, a key supporter of the team, from Swinburne University of Technology and the Walter and Eliza Hall Institute.

“This breakthrough shows how a new optical technology makes such networks faster and more efficient and is a profound demonstration of the benefits of cross-disciplinary thinking, in having the inspiration and courage to take an idea from one field and use it to solve a fundamental problem in another,” Professor Hicks concluded.

Journal Reference:

Xu, X., et al. (2021) 11 TOPS photonic convolutional accelerator for optical neural networks. Nature. doi.org/10.1038/s41586-020-03063-0.