Jul 30 2019

David Lindell, a graduate student in electrical engineering at Stanford University, donned a high-visibility tracksuit and got to work, stretching, hopping, and walking across an empty room. Through a camera pointed away from Lindell, at what appeared to be a blank wall, his colleagues could watch his every move.

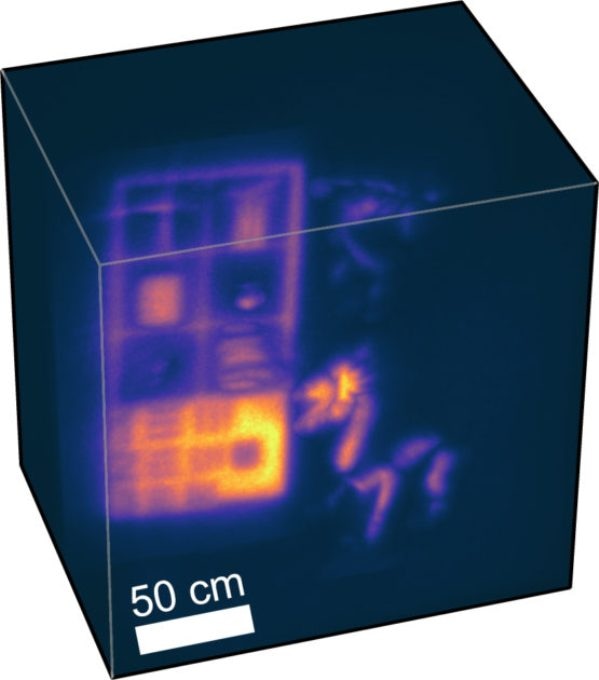

Objects—including books, a stuffed animal, and a disco ball—in and around a bookshelf tested the system’s versatility in capturing light from different surfaces in a large-scale scene. (Image credit: David Lindell)

This was possible because Lindell, hidden from the camera's direct view, was being scanned by a high-powered laser, and the single particles of light he reflected onto the walls around him were captured and reconstructed by the camera's advanced sensors and processing algorithm.

People talk about building a camera that can see as well as humans for applications such as autonomous cars and robots, but we want to build systems that go well beyond that. We want to see things in 3D, around corners and beyond the visible light spectrum.

Gordon Wetzstein, Assistant Professor of Electrical Engineering, Stanford University

The camera system Lindell tested, which the researchers are presenting at the SIGGRAPH 2019 conference on August 1, builds on previous around-the-corner cameras this team developed. It can capture more light from a greater variety of surfaces, see wider and farther away, and, for the first time, is fast enough to track out-of-sight movement such as Lindell's calisthenics.

The researchers hope that superhuman vision systems could eventually help autonomous cars and robots operate even more safely than they would under human guidance.

Practicality and seismology

Ensuring that their system is practical was a high priority for the researchers. The hardware they chose, the scanning and image processing speeds, and the style of imaging are already common in autonomous car vision systems.

Previous systems for viewing scenes outside a camera's line of sight relied on objects that reflect light either evenly or strongly. Real-world objects, such as shiny cars, fall outside these categories, so this system is designed to handle light bouncing off a wide range of surfaces, including books, disco balls, and intricately textured statues.

Central to their progress was a laser 10,000 times more powerful than the one they were using a year earlier. The laser scans a wall opposite the scene of interest; the light bounces off the wall, hits the objects in the scene, then bounces back to the wall and on to the camera sensors.
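To make that light path concrete, here is a minimal timing sketch. It assumes straight-line legs between known 3D points (in metres) and simply adds up laser to lit wall point, wall to hidden object, object back to an observed wall point, and that wall point to the sensor. The helper names `arrival_time_s` and `hidden_leg_time_s` are hypothetical and not taken from the team's software.

```python
import math
from typing import Sequence

C = 299_792_458.0  # speed of light in vacuum, m/s


def arrival_time_s(laser: Sequence[float], wall_lit: Sequence[float],
                   hidden: Sequence[float], wall_seen: Sequence[float],
                   sensor: Sequence[float]) -> float:
    """Flight time of one three-bounce path (hypothetical helper).

    laser -> lit wall point -> hidden object -> observed wall point -> sensor.
    Positions are 3D coordinates in metres; every leg is a straight line.
    """
    path_m = (math.dist(laser, wall_lit) + math.dist(wall_lit, hidden)
              + math.dist(hidden, wall_seen) + math.dist(wall_seen, sensor))
    return path_m / C


def hidden_leg_time_s(wall_lit: Sequence[float], hidden: Sequence[float],
                      wall_seen: Sequence[float]) -> float:
    """Flight time spent only between the wall and the hidden scene.

    The laser->wall and wall->sensor legs depend only on the known setup
    geometry, so they can be subtracted from every measurement.
    """
    return (math.dist(wall_lit, hidden) + math.dist(hidden, wall_seen)) / C
```

For example, with the laser and sensor 2 m in front of a wall point and a hidden object 1.5 m from that same point, the full path is 7 m (about 23 ns), of which the hidden round trip is 3 m (about 10 ns). Because 1 cm of path corresponds to roughly 33 ps, resolving the hidden scene requires very fine timing.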

By the time the laser light reaches the camera, only specks remain, but the sensor captures every one and passes it to a highly efficient algorithm, also developed by the team, that untangles these echoes of light to decipher the hidden scene.
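The article does not describe the sensor's output format. A common arrangement for this kind of time-of-flight imaging is a single-photon detector that records when each detection arrives relative to the laser pulse. Under that assumption, the sparse "specks" from each scanned wall point can be accumulated into a histogram of photon counts versus time, often called a transient. The sketch below shows only that binning step. `transient_histogram` and `path_length_per_bin_m` are hypothetical names.

```python
import numpy as np

C = 299_792_458.0  # speed of light in vacuum, m/s


def transient_histogram(arrival_s, bin_width_s: float, n_bins: int) -> np.ndarray:
    """Count photon detections per time bin for one scanned wall point.

    arrival_s: detection times in seconds, measured from the laser pulse
    (an assumed input format). Detections outside
    [0, n_bins * bin_width_s) are discarded.
    """
    t = np.asarray(arrival_s, dtype=float)
    idx = np.floor(t / bin_width_s).astype(int)   # bin index of each photon
    keep = (idx >= 0) & (idx < n_bins)            # drop out-of-range detections
    return np.bincount(idx[keep], minlength=n_bins)


def path_length_per_bin_m(bin_width_s: float) -> float:
    """Total optical path length covered by one time bin."""
    return C * bin_width_s
```

For instance, `transient_histogram([0.5e-9, 0.6e-9, 1.5e-9, 10e-9], 1e-9, 4)` yields counts `[2, 1, 0, 0]`. The last photon falls outside the 4 ns window and is dropped. A 1 ns bin spans about 0.3 m of total path.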

“When you’re watching the laser scanning it out, you don’t see anything,” said Lindell. “With this hardware, we can basically slow down time and reveal these tracks of light. It almost looks like magic.”

The system scans at four frames per second and can reconstruct a scene at 60 frames per second on a computer equipped with a graphics processing unit (GPU).

To advance their algorithm, the team looked to other fields for inspiration. The researchers were particularly drawn to seismic imaging systems, which bounce sound waves off underground layers of Earth to learn what lies beneath the surface, and they reconfigured their algorithm to likewise interpret bouncing light as waves emanating from the hidden objects.

The result kept the same high speed and low memory use while improving the system's ability to see large scenes containing a variety of materials.
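The paper title names "f–k migration," a frequency-wavenumber (Stolt) migration technique from seismic imaging. The article does not give the team's method, so what follows is only a simplified textbook Stolt migration under stated assumptions. The laser and detector are assumed to aim at the same wall point (confocal scanning) on a planar wall. Time bins are assumed already converted to one-way distance c·t/2, and the radiometric falloff correction is omitted. The spectrum is resampled with linear interpolation. `fk_migrate` is a hypothetical helper, not the authors' implementation.

```python
import numpy as np


def fk_migrate(tau: np.ndarray, dx: float, dz: float) -> np.ndarray:
    """Simplified Stolt (f-k) migration of a confocal transient volume.

    Hypothetical helper for illustration. tau[x, y, k] holds photon counts
    for wall point (x, y) in time bin k, with bin k already converted to
    one-way distance k * dz (that is, c * t / 2). Returns |volume|[x, y, z];
    bright voxels mark likely hidden reflectors. No falloff correction.
    """
    nx, ny, nt = tau.shape
    # Zero-pad each axis to twice its length to limit circular wrap-around.
    padded = np.zeros((2 * nx, 2 * ny, 2 * nt), dtype=complex)
    padded[:nx, :ny, :nt] = tau
    px, py, pt = padded.shape

    # 3D FFT over (x, y, t); fftshift sorts each frequency axis for np.interp.
    spec = np.fft.fftshift(np.fft.fftn(padded))
    kx = np.fft.fftshift(np.fft.fftfreq(px, d=dx))
    ky = np.fft.fftshift(np.fft.fftfreq(py, d=dx))
    kt = np.fft.fftshift(np.fft.fftfreq(pt, d=dz))  # temporal freq, depth units
    kz = kt  # the output depth axis uses the same frequency grid

    out = np.zeros_like(spec)
    for i in range(px):
        for j in range(py):
            radius = np.sqrt(kx[i] ** 2 + ky[j] ** 2 + kz ** 2)
            # Stolt mapping: output wavenumber kz reads the input at this kt.
            target = np.sign(kz) * radius
            # Jacobian |kz| / radius of the change of variables kt -> kz.
            weight = np.divide(np.abs(kz), radius,
                               out=np.zeros_like(radius), where=radius > 0)
            line = spec[i, j, :]
            # np.interp is real-only, so interpolate real and imaginary parts.
            re = np.interp(target, kt, line.real, left=0.0, right=0.0)
            im = np.interp(target, kt, line.imag, left=0.0, right=0.0)
            out[i, j, :] = weight * (re + 1j * im)

    # Back to space; crop away the padding to return the original grid.
    volume = np.fft.ifftn(np.fft.ifftshift(out))
    return np.abs(volume[:nx, :ny, :nt])
```

To try it, simulate a single hidden point. Put a spike at depth bin `round(sqrt((x - x0)**2 + (y - y0)**2 + z0**2))` for every wall point. This traces the hyperbola-like "echo" pattern. Then check that the brightest voxel of `fk_migrate(tau, 1.0, 1.0)` sits near `(x0, y0, z0)`. The whole reconstruction is a handful of FFTs plus a per-column resampling, which is why this family of methods is fast and memory-light.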

“There are many ideas being used in other spaces (seismology, imaging with satellites, synthetic aperture radar) that are applicable to looking around corners,” said Matthew O’Toole, an assistant professor at Carnegie Mellon University who was previously a postdoctoral fellow in Wetzstein’s lab. “We’re trying to take a little bit from these fields and we’ll hopefully be able to give something back to them at some point.”

Humble steps

Seeing real-time movement from otherwise invisible light bounced around a corner was an exciting moment for the team, but a practical system for autonomous cars or robots will require further improvements.

It’s very humble steps. The movement still looks low-resolution and it’s not super-fast but compared to the state-of-the-art last year it is a significant improvement. We were blown away the first time we saw these results because we’ve captured data that nobody’s seen before.

Gordon Wetzstein, Assistant Professor of Electrical Engineering, Stanford University

The researchers are eager to move on to testing their system on autonomous research cars, while exploring other possible applications, such as medical imaging that can see through tissue. Along with further improvements to resolution and speed, they also plan to make the system more robust to the challenging visual conditions drivers encounter, such as rain, fog, snow, and sandstorms.

Wave-Based Non-Line-of-Sight Imaging using Fast f–k Migration | SIGGRAPH 2019