Jul 6 2015

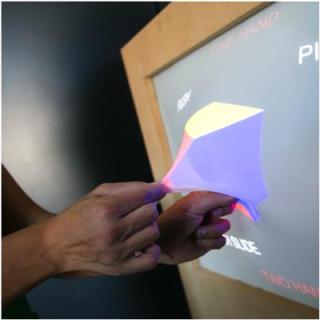

Exciting new technologies, which allow users to change the shape of displays with their hands, promise to revolutionise the way we interact with smartphones, laptops and computers. Imagine pulling objects and data out of the screen and playing with these in mid-air.

© GHOST


Today we live in a world of flat-screen displays we use all day – whether it’s the computer in the office, a smartphone on the train home, the TV or iPad on the couch in the evening. The world we live in is not flat, though; it’s made of hills and valleys, people and objects. Imagine if we could use our fingertips to manipulate the display and drag features out of it into our 3D world.

Such a vision led to the launch in January 2013 of GHOST (Generic, Highly-Organic Shape-Changing Interfaces), an EU-supported research project designed to tap humans’ ability to reason about and manipulate physical objects through the interfaces of computers and mobile devices.

‘This will have all sorts of implications for the future, from everyday interaction with mobile phones to learning with computers and design work,’ explained GHOST coordinator Professor Kasper Hornbæk of the University of Copenhagen. ‘It’s not only about deforming the shape of the screen, but also the digital object you want to manipulate, maybe even in mid-air. Through ultrasound levitation technology, for example, we can project the display out of the flat screen. And thanks to deformable screens we can plunge our fingers into it.’

Shape-changing displays you can touch and feel

This breakthrough in user interaction with technology allows us to handle objects, and even data, in a completely new way. A surgeon, for instance, will be able to work on a virtual brain physically, with the full tactile experience, before performing a real-life operation. Designers and artists using physical proxies such as clay can mould and remould objects and store them in the computer as they work. GHOST researchers are also working with deformable interfaces, such as pads and sponges, that musicians can flex to control timbre, speed and other parameters in electronic music.

Indeed, GHOST has produced an assembly line of prototypes to showcase shape-changing applications. One of these, ‘Emerge’, allows data in bar charts to be pulled out of the screen by fingertips. The information, whether it’s election results or rainfall patterns, can then be re-ordered and broken down by column, row or individually, in order to visualise it better. The researchers have also been working with ‘morphees’, flexible mobile devices with lycra or alloy displays which bend and stretch according to use. These can change shape automatically to form screens that shield your fingers when you type in a PIN code, for example, or to move the display in step with the twists and turns of a game. Such devices can also be enlarged in the hand to examine data more closely and shrunk again for storing away in a case or pocket.

Tactile technology reaching the market

One of the GHOST partners, the University of Bristol, has spun off a startup called UltraHaptics, now employing 12 people, to develop technology studied in GHOST that uses ultrasound to create the sensation of touch in mid-air. The company has attracted seed investment in the UK and further funding from the Horizon 2020 programme.

‘GHOST has made a lot of progress simply by bringing the partners together and allowing us to share our discoveries,’ commented Prof Hornbæk. ‘Displays which change shape as you are using them are probably only five years off now. If you want your smartphone to project the landscape of a terrain 20 or 30 cm out of the display, that’s a little further off, but we’re working on it!’

GHOST, which finishes at the end of this year, involves four partners in the United Kingdom, the Netherlands and Denmark, and receives EUR 1.93 million from the EU’s Future and Emerging Technologies programme.