Apr 29 2013

EPFL has designed "holographic showcases" as part of its research into the museum experiences of the future. They are being presented for the first time at the exhibition "Le lecteur à l'oeuvre" (the reader at work), held at the Martin Bodmer Foundation (Cologny / GE) from 27 April to 25 August.

© 2013 EPFL

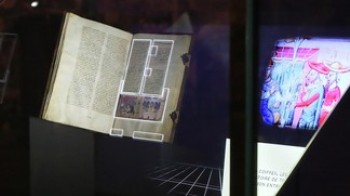

Come to the window. Take a look at the beautiful illuminations that enhance this 12th-century manuscript, secured in its glass case. Suddenly, as if by magic, its margins come alive and new pieces of information seem to emerge from the page, moving away from the text and adding a new dimension to it. Welcome to a new era of museum experiences, where traditional contemplation is combined with content offering unlimited possibilities.

The first "holographic showcases" developed at EPFL will be presented in Cologny (GE) from 27 April to 25 August as part of the exhibition "Le lecteur à l'oeuvre" (the reader at work) at the Martin Bodmer Foundation. "We are trying to create a new kind of museum language, one that combines the museum experience and its explanation in a single space," said Frédéric Kaplan, professor of digital humanities at EPFL. "Each showcase contains a 3D camera that allows us to analyze each visitor's behavior and their reactions to changes in the showcase's content. The results will be published once the exhibition is over."

"Self-generated" films

EPFL’s involvement in "Le lecteur à l'oeuvre", and its use of innovative technologies to display older works, bring a new dimension to the experience of reading, the very process the Bodmer Foundation explores throughout the exhibition. To complement the holographic showcases, the Digital Humanities Laboratory has also developed a software application that automatically generates a documentary film from a selection of page reproductions and explanatory texts. "You just need to write a commentary, for example a manuscript’s history, and associate it with images of some of its pages. The software then creates a video sequence, automatically divided into chapters, which the user can navigate in a particularly intuitive way," explained Frédéric Kaplan. These sequences can also be watched online.

The documentary’s text is read by a synthetic voice. "In this way, each video can be immediately adapted and updated, for example to add new information." Thanks to the synthetic voice, and despite a delivery that remains somewhat monotonous, this amounts to nothing less than "Wikipedia-style" interactivity applied to video, something that technical constraints had made impossible until now.

Visitors to the exhibition in Cologny will have the privilege of being the first to test these new types of documentaries, on tablets made available to them. "These are just two of the possibilities we are exploring in our quest for new museum experiences," concluded Frédéric Kaplan, referring to the Venice Time Machine project and to the future cultural and artistic pavilion whose construction will soon begin on EPFL’s campus.