The human eye is in constant involuntary movement, even when it is fixating on a target. When this involuntary movement becomes extreme in amplitude and angular speed, it is a medical condition referred to as nystagmus. Nystagmus is most commonly caused by a neurological problem that is present at birth or develops in early childhood. Acquired nystagmus, which occurs later in life, can be a symptom of another condition or disease.

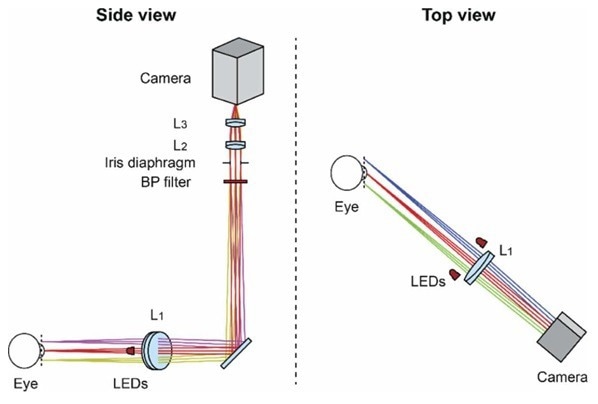

Pupil tracker optical setup showing the Mikrotron camera, interferometric band-pass filter, and achromatic doublets (L1 to L3). The camera is tilted relative to the optical axis to compensate for the 45° object plane tilt, which facilitates integration with ophthalmoscopes or other devices. Image Credit: Stanford University

Nystagmus presents a number of problems for doctors who use ophthalmoscopy to diagnose and monitor conditions including glaucoma, diabetes, and high blood pressure, or to evaluate symptoms of retinal detachment. It can introduce substantial blur in ophthalmoscope images and distortion in scanning ophthalmoscope images, making the detection of eye disease challenging. Blur and distortion are particularly problematic in adaptive optics ophthalmoscopy because of its high magnification and small fields of view.

Researchers in the Department of Ophthalmology at Stanford University (Palo Alto, CA) have developed a low-latency monocular pupil tracker using hybrid FPGA-CPU computing. They found that by overlapping the image processing with the download of the pixel stream from a high-resolution camera, rather than following the more conventional approach in which each image is fully downloaded before processing starts, they could reduce latency and, therefore, blur in ophthalmoscope images. High precision and low latency make their device suitable for applications that require real-time eye movement compensation, such as retinal imaging, retinal functional testing, retinal laser treatment, and refractive surgery.

The pupil tracker was built with off-the-shelf components, with the aim of improving both its performance and its cost. It consists of an optical system with infrared illumination that relays the pupil of the eye onto a Mikrotron CMOS camera connected to an FPGA in a computer with a powerful CPU. Two 940 nm light-emitting diodes are positioned to the left and right of the lens closest to the eye. The use of two LEDs, rather than one, spreads the retinal irradiance across two areas with their centers separated by approximately 25° of visual angle, providing better light safety than a single LED.

Specifically, the pupil tracker was evaluated with a Mikrotron EoSens 3CL camera using an extended-full configuration CameraLink interface and capturing 8-bit depth images to achieve the maximum download data rate that this interface allows. The compact EoSens 3CL camera's maximum resolution is 1696 × 1710 pixels at a frame rate of 285 fps. The integrated, adjustable region of interest function enables operation at 628 fps with 1280 × 1024-pixel resolution, 893 fps at 1280 × 720, and 816 fps at 1000 × 1000. The camera achieves steplessly adjustable frame rates of up to 285,000 fps at reduced resolution.
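The frame rates quoted above can be checked against each other with simple arithmetic. The following is a minimal sketch, assuming one byte per pixel (the 8-bit depth mentioned above) and ignoring any blanking or protocol overhead; the function name `data_rate_mb_per_s` is hypothetical and used only for illustration.

```python
def data_rate_mb_per_s(width, height, fps, bytes_per_pixel=1):
    """Raw pixel throughput in megabytes per second (1 MB = 1e6 bytes)."""
    return width * height * fps * bytes_per_pixel / 1e6

# (width, height, frames per second) for the modes quoted in the article.
modes = [
    (1696, 1710, 285),
    (1280, 1024, 628),
    (1280, 720, 893),
    (1000, 1000, 816),
]

for w, h, fps in modes:
    print(f"{w} x {h} @ {fps} fps: {data_rate_mb_per_s(w, h, fps):.0f} MB/s")
```

Under these assumptions every mode works out to roughly 820 MB/s, which suggests that the achievable frame rate at each region of interest is set by the throughput of the camera interface rather than by the sensor alone.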

Three optical setups were used in testing, each with an approximately 18 mm square field of view tilted 45° with respect to the optical axis and a correspondingly tilted image plane. The third experimental approach used the Mikrotron setup to capture 210 × 284 pixel images with 0.18 ms exposure at 5400 frames/s. Raw pixel values from the camera were downloaded to a reconfigurable frame grabber featuring a Kintex-7 325T FPGA that was custom programmed using the LabVIEW FPGA module and the Vivado Design Suite. The frame grabber was installed in a PCIe slot of a computer with an Intel i7-6850K CPU and an Nvidia GeForce GTX 1050 discrete GPU. Raw images underwent background subtraction, field-flattening, 1-dimensional low-pass filtering, thresholding, and robust pupil edge detection, all applied to the pixel stream on the FPGA, followed by least-squares fitting of the pupil edge pixel coordinates to an ellipse on the CPU.
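The processing chain described above can be illustrated offline in software. The following is a minimal sketch, assuming NumPy and a dark pupil on a brighter background; it is not the researchers' FPGA implementation. The function names, the box-filter kernel, the threshold value, and the synthetic test image are all hypothetical, field-flattening is assumed to be a division by a gain image, the "robust" edge detection is replaced by a simple first-and-last dark pixel per row, and the ellipse fit is a plain algebraic least-squares conic fit.

```python
import numpy as np

def pupil_edges(raw, background, flat, threshold, kernel=5):
    """Return (x, y) edge coordinates of a dark pupil, one image row at a time."""
    # Background subtraction, then field-flattening (assumed: divide by gain image).
    img = (raw.astype(float) - background) / flat
    box = np.ones(kernel) / kernel
    xs, ys = [], []
    for y, row in enumerate(img):  # rows are handled in arrival order
        # 1-D low-pass filter; edge padding avoids artificial dark borders.
        padded = np.pad(row, kernel // 2, mode="edge")
        smooth = np.convolve(padded, box, mode="valid")
        dark = np.flatnonzero(smooth < threshold)  # thresholding
        if dark.size >= 2:
            # Simplified edge detection: first and last dark pixel in the row.
            xs += [dark[0], dark[-1]]
            ys += [y, y]
    return np.array(xs, float), np.array(ys, float)

def fit_ellipse_center(x, y):
    """Least-squares fit of A u^2 + B uv + C v^2 + D u + E v = 1; returns the center."""
    mx, my = x.mean(), y.mean()  # shift to the mean for better conditioning
    u, v = x - mx, y - my
    design = np.column_stack([u * u, u * v, v * v, u, v])
    A, B, C, D, E = np.linalg.lstsq(design, np.ones_like(u), rcond=None)[0]
    # The conic's gradient vanishes at its center.
    cu, cv = np.linalg.solve([[2 * A, B], [B, 2 * C]], [-D, -E])
    return cu + mx, cv + my

# Synthetic example: a dark disk centered at (x=140, y=100) with radius 40.
yy, xx = np.mgrid[0:210, 0:284]
raw = np.full((210, 284), 200.0)
raw[(xx - 140) ** 2 + (yy - 100) ** 2 <= 40 ** 2] = 20.0

x, y = pupil_edges(raw, np.zeros_like(raw), np.ones_like(raw), threshold=110.0)
cx, cy = fit_ellipse_center(x, y)
print(f"estimated pupil center: ({cx:.2f}, {cy:.2f})")
```

The split mirrors the division of labor in the article: the per-row steps need only the pixels of the current row, so they can run while the rest of the frame is still arriving, whereas the ellipse fit needs the full set of edge coordinates and runs once per frame.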

Pupil tracking was successfully demonstrated in a normal fixating subject at 575, 1250, and 5400 frames per second. According to the study, the approach seems well suited for tracking the pupil with precision comparable to or better than that of current pupil trackers. This combination of high precision and low latency makes the device suitable for applications that require real-time eye movement compensation, such as retinal imaging, retinal functional testing, retinal laser treatment, and refractive surgery.

The project was funded by Research to Prevent Blindness and the National Eye Institute.