Standard image sensors, like the billion or so already installed in nearly every smartphone in use today, capture light intensity and color. Built on a common, mass-produced sensor technology called CMOS, these cameras have become smaller and more robust every year and now deliver tens-of-megapixels resolution.

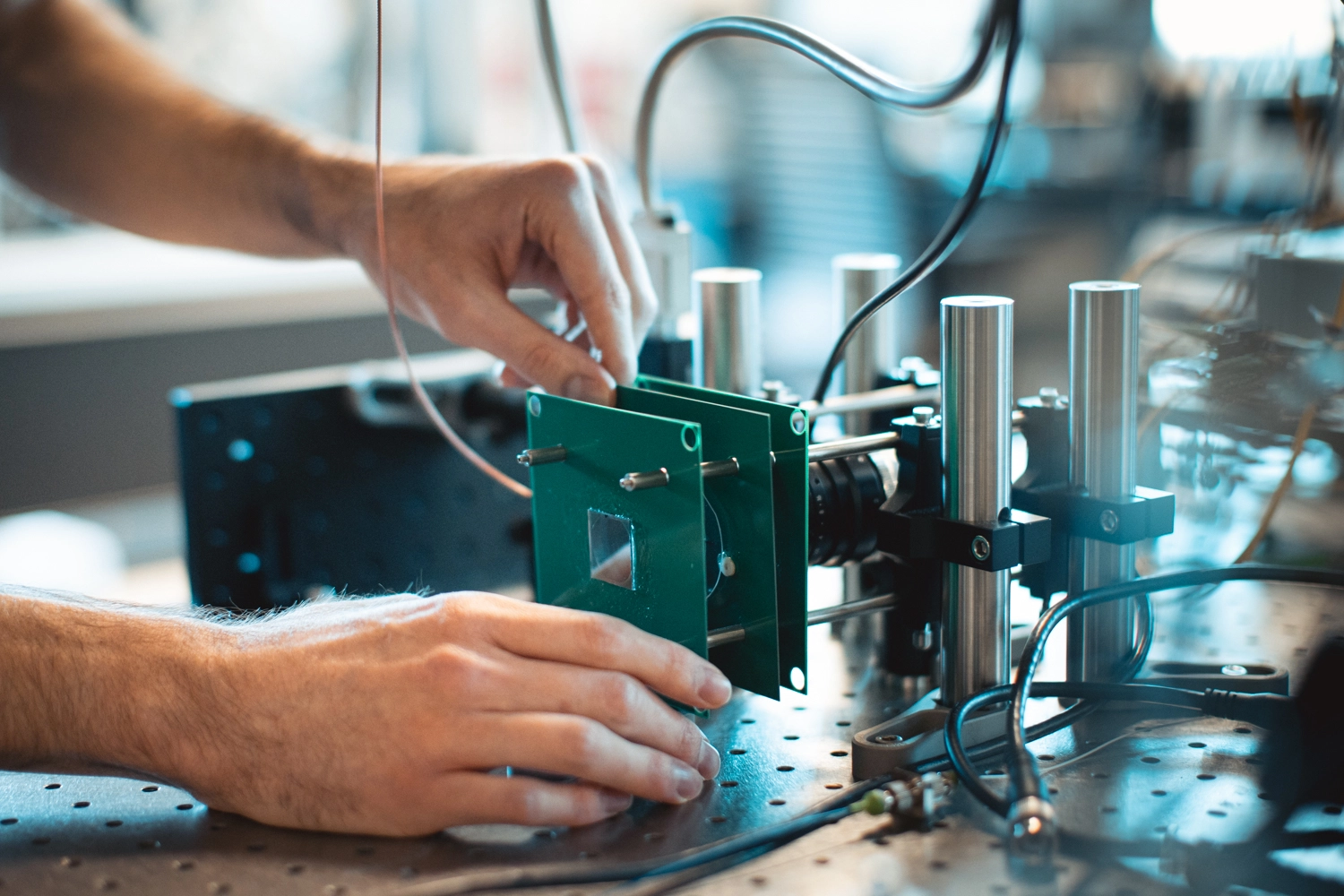

The lab-based prototype lidar system built by the research team successfully captured megapixel-resolution depth maps using a commercially available digital camera. Image Credit: Andrew Brodhead

However, they still see in just two dimensions, recording images that are flat, like a drawing.

Stanford University researchers have developed a new method that enables standard image sensors to see light in three dimensions. These common cameras could, in the near future, be used to measure the distance to objects.

The engineering possibilities are huge. Measuring the distance between objects with light is currently possible only with dedicated and costly light detection and ranging (lidar) systems. If you have seen a self-driving car, you can spot it right away by the hunchback of technology mounted on its roof. Most of that gear is the vehicle’s lidar crash-avoidance system, which uses lasers to determine distances between objects.

Lidar is like radar, but with light instead of radio waves. By shining a laser at objects and measuring the light that bounces back, it can determine how far away an object is, how fast it is traveling, whether it is moving closer or farther away and, most importantly, whether the paths of two moving objects will cross in the near future.
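
As a rough illustration of the ranging idea, the sketch below assumes a simple pulsed time-of-flight scheme, which the article does not specify: the distance is half the round-trip travel time multiplied by the speed of light, and two successive distance readings give the speed along the line of sight. The function names and the timing values are hypothetical.

```python
# Minimal sketch, assuming pulsed time-of-flight ranging (an assumption,
# not necessarily the scheme used by any particular lidar system).

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def distance_from_round_trip(round_trip_s: float) -> float:
    """One-way distance for a pulse that travels out and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def radial_speed(earlier_m: float, later_m: float, interval_s: float) -> float:
    """Rate of change of distance; negative means the object is approaching."""
    return (later_m - earlier_m) / interval_s


# Hypothetical echo delays measured 0.1 s apart.
d1 = distance_from_round_trip(200e-9)  # roughly 30 m
d2 = distance_from_round_trip(190e-9)  # slightly closer
speed = radial_speed(d1, d2, 0.1)      # negative: the object is approaching
```

The sign of `speed` indicates whether the object is moving closer (negative) or farther away (positive).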

Existing lidar systems are big and bulky, but someday, if you want lidar capabilities in millions of autonomous drones or in lightweight robotic vehicles, you’re going to want them to be very small, very energy efficient, and offering high performance.

Okan Atalar, Study First Author and Doctoral Candidate in Electrical Engineering, Stanford University

The study, published in the journal Nature Communications, introduces a compact, energy-efficient device that can be used for lidar.

For the team, this advance offers two intriguing opportunities. First, it could enable megapixel-resolution lidar, a resolution that is not possible today. Higher resolution would allow lidar to identify targets at greater range.

A self-driving car, for instance, might be able to distinguish a cyclist from a pedestrian from farther away, sooner than a human driver could, and so avoid an accident more easily. Second, any image sensor available today, including the billions in smartphones, could capture rich 3D images with minimal additional hardware.

Changing How Machines See

One way to add 3D imaging to standard sensors is to add a light source (easily done) and a modulator (not so easily done) that switches the light on and off very rapidly, millions of times per second.

By measuring the variations in the light, engineers can calculate distance. Existing modulators can do that too, but they require relatively large amounts of power. So large, in fact, that it makes them entirely impractical for everyday use.
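
To make the link between modulated light and distance concrete, here is a minimal sketch that assumes a common phase-based scheme for intensity-modulated light; the article does not state which scheme the team uses. Under that assumption, the returning light lags the emitted light by a phase shift proportional to distance, d = c·Δφ / (4π·f), and distances repeat every c / (2f). The 4 MHz modulation frequency and the function names are hypothetical.

```python
import math

# Minimal sketch, assuming phase-based ranging with intensity-modulated
# light (an assumption; the article does not specify the scheme).

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum


def distance_from_phase(phase_shift_rad: float, mod_freq_hz: float) -> float:
    """Distance implied by the phase lag of the returning modulated light."""
    return SPEED_OF_LIGHT_M_S * phase_shift_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT_M_S / (2.0 * mod_freq_hz)


MOD_FREQ_HZ = 4e6  # hypothetical: "millions of times per second"

quarter_cycle_m = distance_from_phase(math.pi / 2.0, MOD_FREQ_HZ)  # about 9.4 m
max_range_m = unambiguous_range(MOD_FREQ_HZ)                       # about 37.5 m
```

A higher modulation frequency gives finer distance resolution for the same phase precision, at the cost of a shorter unambiguous range.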

The solution devised by the Stanford team, a collaboration between the ArbabianLab and the Laboratory for Integrated Nano-Quantum Systems (LINQS), relies on a phenomenon called acoustic resonance. The team built a simple acoustic modulator from a thin wafer of lithium niobate (a transparent crystal that is highly desirable for its electrical, acoustic and optical properties) coated with two transparent electrodes.

Critically, lithium niobate is piezoelectric. That is, when electricity is applied through the electrodes, the crystal lattice at the heart of its atomic structure changes shape. It vibrates at very high, very predictable and very controllable frequencies.

Furthermore, when it vibrates, lithium niobate strongly modulates light. With the addition of a few polarizers, this new modulator efficiently switches the light on and off several million times a second.

What’s more, the geometry of the wafers and the electrodes defines the frequency of light modulation, so we can fine-tune the frequency. Change the geometry and you change the frequency of modulation.

Okan Atalar, Study First Author and Doctoral Candidate in Electrical Engineering, Stanford University
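
One way to picture how geometry sets the frequency is the textbook relation for a plate vibrating in a thickness mode, where the fundamental resonance is roughly f = v / (2t) for acoustic velocity v and thickness t. The sketch below uses that idealized relation; it is not taken from the study, and the velocity and thickness values are purely illustrative placeholders rather than measured properties of the team's wafer.

```python
# Minimal sketch, assuming an idealized half-wavelength thickness resonance.
# The numbers below are hypothetical and not taken from the study.


def thickness_resonance_hz(sound_speed_m_s: float, thickness_m: float,
                           harmonic: int = 1) -> float:
    """Resonant frequency of an ideal plate: harmonic * v / (2 * t)."""
    return harmonic * sound_speed_m_s / (2.0 * thickness_m)


ASSUMED_SOUND_SPEED_M_S = 4000.0  # illustrative acoustic velocity
ASSUMED_THICKNESS_M = 0.5e-3      # illustrative wafer thickness (0.5 mm)

f_full = thickness_resonance_hz(ASSUMED_SOUND_SPEED_M_S, ASSUMED_THICKNESS_M)
f_half = thickness_resonance_hz(ASSUMED_SOUND_SPEED_M_S, ASSUMED_THICKNESS_M / 2.0)
```

In this idealized picture, halving the thickness doubles the resonant frequency, which is the sense in which changing the geometry changes the modulation frequency.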

In technical terms, the piezoelectric effect creates an acoustic wave through the crystal that rotates the polarization of light in a desirable, tunable and usable way. It is this key technical departure that enabled the team’s success.

A polarizing filter is then carefully placed after the modulator to convert this rotation into intensity modulation, making the light brighter and darker and effectively switching it on and off millions of times per second.
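
The conversion from polarization rotation to brightness can be sketched with Malus's law, I = I₀·cos²θ, where θ is the angle between the light's polarization and the filter's axis. The model below is an idealization under stated assumptions: it treats the modulator as a pure rotator of linearly polarized light driven sinusoidally around a 45-degree bias. The function names, bias, swing and frequencies are hypothetical and are not parameters from the study.

```python
import math

# Minimal sketch, assuming an ideal polarizer and a modulator that simply
# rotates linear polarization (an idealization; values are hypothetical).


def transmitted_intensity(i0: float, angle_rad: float) -> float:
    """Malus's law: intensity passed by an ideal polarizer."""
    return i0 * math.cos(angle_rad) ** 2


def modulated_samples(i0: float, mod_freq_hz: float, swing_rad: float,
                      sample_rate_hz: float, count: int) -> list[float]:
    """Intensity over time as the polarization angle swings sinusoidally."""
    bias_rad = math.pi / 4.0  # assumed bias: half transmission at rest
    samples = []
    for k in range(count):
        t = k / sample_rate_hz
        angle = bias_rad + swing_rad * math.sin(2.0 * math.pi * mod_freq_hz * t)
        samples.append(transmitted_intensity(i0, angle))
    return samples


# Hypothetical 4 MHz modulation sampled at 64 MHz (16 samples per cycle).
samples = modulated_samples(1.0, 4e6, math.pi / 4.0, 64e6, 16)
```

With a swing of 45 degrees around the bias, the transmitted light sweeps between fully dark and fully bright once per modulation cycle.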

While there are other ways to turn the light on and off, this acoustic approach is preferable because it is extremely energy efficient.

Okan Atalar, Study First Author and Doctoral Candidate in Electrical Engineering, Stanford University

Practical Outcomes

The modulator’s design is simple and can be integrated into a proposed system that uses off-the-shelf cameras, like those found in everyday cellphones and digital SLRs.

Atalar and advisor Amin Arbabian, associate professor of electrical engineering and the project’s senior author, believe it could become the foundation for a new kind of compact, cost-effective, energy-efficient lidar—“standard CMOS lidar,” as they label it—that could be added into extraterrestrial rovers, drones and other applications.

The potential impact of the proposed modulator is huge; it could add the missing third dimension to any image sensor, they report. To demonstrate this, the engineers built a prototype lidar system on a lab bench that used a basic digital camera as a receiver. The team reports that the prototype captured megapixel-resolution depth maps while requiring only small amounts of power to run the optical modulator.

With additional refinements, Atalar says the team has since reduced the energy consumption by nearly 10 times compared with the already-low figure reported in the research article, and they believe a further reduction of several hundred times is within reach. If that happens, a future of small-scale lidar with standard image sensors, and 3D smartphone cameras, could become a reality.

The other Stanford researchers are Amir H. Safavi-Naeini, associate professor of applied physics, and postdoctoral fellow Raphael Van Laer. This study was partly funded by Stanford SystemX Alliance, the National Science Foundation, and the Office of Naval Research.

Journal Reference:

Atalar, O., et al. (2022) Longitudinal piezoelectric resonant photoelastic modulator for efficient intensity modulation at megahertz frequencies. Nature Communications. doi.org/10.1038/s41467-022-29204-9.