Sep 30 2016

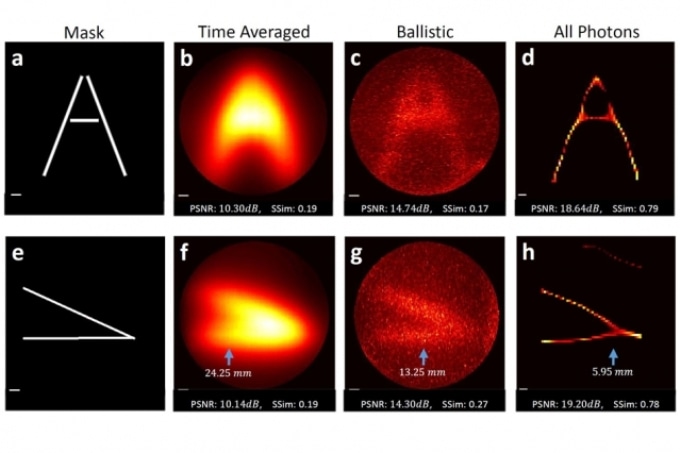

In experiments, the researchers fired a laser beam through a “mask” — a thick sheet of plastic with slits cut through it in a certain configuration, such as the letter A — and then through a 1.5-centimeter “tissue phantom,” a slab of material designed to mimic the optical properties of human tissue for purposes of calibrating imaging systems. (Image courtesy of the researchers)


An MIT research team has developed a method for recovering visual information from light that has been scattered by interactions with the environment, such as passing through human tissue.

The method could lead to medical-imaging systems that use visible light, which carries more information than ultrasound waves or X-rays, or to computer vision systems that work in drizzle or fog. Building vision systems that cope with such conditions has been a major obstacle for self-driving cars.

In a series of experiments, the researchers fired a laser beam through a “mask” (a thick plastic sheet with slits cut through it in a specific configuration, such as the letter A) and then through a 1.5 cm “tissue phantom,” a slab of material designed to mimic the optical properties of human tissue for calibrating imaging systems.

Light scattered by the tissue phantom was then collected by a high-speed camera that could measure the light's time of arrival. From that information, the team's algorithms reconstructed an accurate image of the pattern cut into the mask.

The reason our eyes are sensitive only in this narrow part of the spectrum is because this is where light and matter interact most. This is why X-ray is able to go inside the body, because there is very little interaction. That’s why it can’t distinguish between different types of tissue, or see bleeding, or see oxygenated or deoxygenated blood.

Guy Satat, Graduate Student, MIT Media Lab

All Photons Imaging: MIT Media Lab, Camera Culture Group

An imaging algorithm from the MIT Media Lab's Camera Culture group compensates for the scattering of light. The advance could potentially be used to develop optical-wavelength medical imaging and autonomous vehicles. (Video Credit: cameraculturegroup/Youtube.com)

The technique's potential applications in automotive sensing may be even more compelling than those in medical imaging. Several highly reliable experimental algorithms can guide autonomous vehicles in good lighting, but they fail completely in drizzle or fog.

In those conditions, computer vision systems misinterpret the scattered light as having reflected off objects that are not actually there. The new method could address that problem.

Satat’s coauthors on the new paper, published today in Scientific Reports, are three other members of the Media Lab’s Camera Culture group: Ramesh Raskar, the group’s leader, Satat’s thesis advisor, and an associate professor of media arts and sciences; Barmak Heshmat, a research scientist; and Dan Raviv, a postdoc.

Expanding Circles

Like several of the Camera Culture group’s projects, the new system relies on a pulsed laser that emits ultrashort bursts of light and a high-speed camera that can distinguish the arrival times of different groups of photons.

When a burst of light strikes a scattering medium such as a tissue phantom, some of the photons pass through untouched, some are only slightly deflected from a straight path, and some bounce around inside the medium for a relatively long time.

The first photons to reach the sensor have undergone the least scattering; the last photons to arrive have undergone the most.
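The link between arrival time and scattering comes down to path length: light inside a medium travels at roughly c/n, so a photon that arrives later has covered a longer, more convoluted path. The sketch below makes that arithmetic concrete for the 1.5 cm phantom. It assumes a uniform refractive index of 1.4 (an illustrative tissue-like value, not a figure from the paper), and the function names are hypothetical.

```python
# Toy conversion between photon arrival time and path length in a scattering slab.
# Assumption: uniform refractive index N_TISSUE = 1.4 (illustrative, not from the paper).
C = 299_792_458.0   # speed of light in vacuum, m/s
N_TISSUE = 1.4      # assumed refractive index of the phantom
THICKNESS = 0.015   # slab thickness in metres (the 1.5 cm phantom)


def path_length(arrival_time_s: float, n: float = N_TISSUE) -> float:
    """Distance travelled inside the medium by a photon arriving after arrival_time_s."""
    return arrival_time_s * C / n


def excess_path(arrival_time_s: float, thickness: float = THICKNESS, n: float = N_TISSUE) -> float:
    """Extra distance beyond the straight (ballistic) path; 0 means unscattered."""
    return max(0.0, path_length(arrival_time_s, n) - thickness)


# The earliest possible arrival belongs to a photon that went straight through.
ballistic_time = THICKNESS * N_TISSUE / C
print(f"ballistic arrival: {ballistic_time * 1e12:.1f} ps")  # about 70 ps

# A photon arriving 100 ps later has wandered roughly 2 cm further.
late = ballistic_time + 100e-12
print(f"excess path for a photon 100 ps late: {excess_path(late) * 100:.2f} cm")
```

At this scale, differences of a couple of centimetres in path length translate into differences of about a hundred picoseconds in arrival time, which gives a sense of how finely the camera has to separate groups of photons.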

Earlier techniques tried to reconstruct images using only those first, unscattered photons. The MIT team’s method uses the entire optical signal, which is why it is called all-photons imaging.

The data captured by the camera can be thought of as a movie: a 2D image that changes over time. To see how all-photons imaging works, suppose that light reaches the camera from only a single point in the visual field. The first photons to reach the camera pass through the scattering medium unimpeded; they show up as a single illuminated pixel in the movie’s first frame.

The next photons to reach the camera have undergone slightly more scattering, so in the second frame of the movie they appear as a small circle centered on the single pixel from the first frame. With each successive frame the circle expands, until the final frame shows only a general, hazy light.

In practice, however, the camera registers light from many points in the visual field, and their expanding circles overlap. The algorithm’s job is to work out which point in the visual field the photons illuminating each pixel of the image came from.
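The expanding-circle picture can be turned into a small forward model that generates such a movie. The sketch below works in one dimension (a row of pixels) and assumes, purely for illustration, that the blur around each point is Gaussian with a width that grows like a diffusion front, σ(t) = sqrt(2·D·t); the Gaussian shape, the diffusion constant `D`, and the function names are hypothetical, not the model from the paper. Because light from different points adds at the sensor, the movie of a whole scene is the sum of the per-point movies, which is exactly where the overlap comes from.

```python
import math
from typing import List

# Toy 1D forward model of a time-resolved "movie" through a scattering slab.
# Assumptions (illustrative only): Gaussian blur with width sigma(t) = sqrt(2 * D * t),
# frame 0 holds the unscattered (ballistic) light, and every frame carries the same
# total energy (the real rise and decay of intensity over time is ignored).


def point_kernel(n_pixels: int, center: int, frame: int, diffusion: float) -> List[float]:
    """Normalized light distribution from one scene point in a given frame."""
    if frame == 0:
        # First frame: a single lit pixel at the source position.
        return [1.0 if x == center else 0.0 for x in range(n_pixels)]
    sigma = math.sqrt(2.0 * diffusion * frame)
    weights = [math.exp(-((x - center) ** 2) / (2.0 * sigma ** 2)) for x in range(n_pixels)]
    total = sum(weights)
    return [w / total for w in weights]


def render_movie(scene: List[float], n_frames: int, diffusion: float = 1.0) -> List[List[float]]:
    """Sum the expanding blur of every scene point: overlapping 'circles' add linearly."""
    n = len(scene)
    movie = []
    for t in range(n_frames):
        frame = [0.0] * n
        for src, brightness in enumerate(scene):
            if brightness == 0:
                continue
            kernel = point_kernel(n, src, t, diffusion)
            for x in range(n):
                frame[x] += brightness * kernel[x]
        movie.append(frame)
    return movie


# One point: frame 0 is a single lit pixel, and later frames spread out.
single = render_movie([0, 0, 0, 1, 0, 0, 0], n_frames=4)
# Two points: separate in frame 0, but their blurs overlap in later frames.
pair = render_movie([0, 1, 0, 0, 0, 1, 0], n_frames=4)
```

The reconstruction problem is the inverse of `render_movie`: given only the summed frames, decide how much light each scene point contributed.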

Cascading Probabilities

The first step is to determine how the overall intensity of the image changes over time. This indicates how much scattering the light has undergone: if the intensity rises quickly and tails off quickly, the light has not been scattered much; if it rises gradually and tails off slowly, it has been scattered a lot.
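That first step can be sketched as collapsing each frame into a single number, giving an intensity-versus-time curve, and then measuring how quickly the curve rises and decays. This is a hypothetical stand-in rather than the paper's estimator: it assumes equally spaced frames and summarizes the curve by its rise time (from onset to peak) and decay time (from peak until the intensity drops below a chosen fraction of the peak). The 10 % threshold, the function names, and the example curves are illustrative assumptions.

```python
from typing import List, Tuple

# Summarize how spread out in time a time-resolved measurement is.
# Assumptions: equally spaced frames; the 10 % threshold is an arbitrary choice.


def temporal_profile(movie: List[List[float]]) -> List[float]:
    """Total intensity of each frame (sum over all pixels)."""
    return [sum(frame) for frame in movie]


def rise_and_decay(profile: List[float], threshold: float = 0.1) -> Tuple[int, int]:
    """Return (frames from onset to peak, frames from peak until below threshold * peak)."""
    peak = max(range(len(profile)), key=lambda i: profile[i])
    cutoff = threshold * profile[peak]
    # Onset: first frame whose intensity reaches the cutoff.
    onset = next(i for i, v in enumerate(profile) if v >= cutoff)
    # End: last frame after the peak that is still at or above the cutoff.
    end = peak
    while end + 1 < len(profile) and profile[end + 1] >= cutoff:
        end += 1
    return peak - onset, end - peak


sharp = [0, 5, 10, 4, 1, 0, 0, 0]                 # quick spike: little scattering
broad = [0, 1, 3, 5, 7, 8, 7, 6, 5, 4, 3, 2, 1]   # slow rise, long tail: heavy scattering
print(rise_and_decay(sharp))  # (1, 2)
print(rise_and_decay(broad))  # (4, 7)
```

Longer rise and decay times would then be mapped to a wider blur in the scattering model; how that mapping is done is left open in this sketch.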

Based on that estimate, the algorithm considers each pixel of each successive frame and calculates the probability that it corresponds to any given point in the visual field. It then goes back to the first frame of the movie and, using the probabilistic model it has just constructed, predicts what the next frame of the movie will look like.

With each successive frame, it compares its prediction with the actual camera measurement and adjusts its model accordingly. Finally, using the final version of the model, it deduces the pattern of light most likely to have produced the sequence of measurements the camera recorded.
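The predict, compare, and adjust loop can be illustrated with a well-known relative of this idea. The sketch below is not the authors' algorithm: it swaps in the classic Richardson-Lucy (expectation-maximization) update, which likewise uses a probabilistic model of where each scene point's light lands, predicts the measurement, compares it with what the camera recorded, and corrects the estimate. Unlike the method described above, it processes all frames together on each pass rather than one frame at a time. It assumes a 1D scene, a known Gaussian blur of width sqrt(2·D·t) in frame t, and noise-free data; all names and parameters are hypothetical.

```python
import math
from typing import List

# Toy time-resolved reconstruction with Richardson-Lucy (EM) updates.
# Assumptions: 1D scene, Gaussian blur of width sqrt(2 * D * t) in frame t,
# known diffusion constant D, frame 0 unscattered. Not the published algorithm.


def blur_weights(n: int, frame: int, diffusion: float) -> List[List[float]]:
    """weights[x][s]: probability that light from scene point s lands on pixel x in this frame."""
    weights = [[0.0] * n for _ in range(n)]
    for s in range(n):
        if frame == 0:
            column = [1.0 if x == s else 0.0 for x in range(n)]
        else:
            sigma2 = 2.0 * diffusion * frame
            column = [math.exp(-((x - s) ** 2) / (2.0 * sigma2)) for x in range(n)]
        total = sum(column)
        for x in range(n):
            weights[x][s] = column[x] / total
    return weights


def forward(scene: List[float], kernels: List[List[List[float]]]) -> List[List[float]]:
    """Predicted movie: one predicted frame per kernel."""
    n = len(scene)
    return [[sum(k[x][s] * scene[s] for s in range(n)) for x in range(n)] for k in kernels]


def reconstruct(movie: List[List[float]], diffusion: float = 1.0, iterations: int = 200) -> List[float]:
    n = len(movie[0])
    kernels = [blur_weights(n, t, diffusion) for t in range(len(movie))]
    estimate = [1.0] * n  # flat, positive starting guess
    # Total weight each scene point contributes across all pixels and frames.
    sensitivity = [sum(k[x][s] for k in kernels for x in range(n)) for s in range(n)]
    for _ in range(iterations):
        predicted = forward(estimate, kernels)
        # Compare prediction with measurement and push each ratio back to the scene.
        correction = [0.0] * n
        for k, measured, guess in zip(kernels, movie, predicted):
            for x in range(n):
                if guess[x] > 0:
                    ratio = measured[x] / guess[x]
                    for s in range(n):
                        correction[s] += k[x][s] * ratio
        estimate = [estimate[s] * correction[s] / sensitivity[s] for s in range(n)]
    return estimate


n_pixels, n_frames = 9, 5
truth = [0, 0, 1, 0, 0, 0, 1, 0, 0]
kernels = [blur_weights(n_pixels, t, 1.0) for t in range(n_frames)]
movie = forward(truth, kernels)
recovered = reconstruct(movie, diffusion=1.0)
print([round(v, 2) for v in recovered])
```

The multiplicative update keeps the estimate non-negative and preserves the total measured light, two properties that make it a natural toy for photon-counting data.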

One limitation of the current version of the system is that the light emitter and the camera are on opposite sides of the scattering medium, which restricts its applicability to medical imaging. But Satat believes it should be possible to use fluorescent particles known as fluorophores as a light source: they can be injected into the bloodstream and are already used in medical imaging.

Moreover, fog scatters light less than human tissue does, so light reflected from laser pulses fired into the environment could be well suited to automotive sensing.

People have been using what is known as time gating, the idea that photons not only have intensity but also time-of-arrival information and that if you gate for a particular time of arrival you get photons with certain specific path lengths and therefore [come] from a certain specific depth in the object. This paper is taking that concept one level further and saying that even the photons that arrive at slightly different times contribute some spatial information. Looking through scattering media is a problem that’s of large consequence. There’s maybe one barrier that’s been crossed, but there are maybe three more barriers that need to be crossed before this becomes practical.

Ashok Veeraraghavan, Assistant Professor, Rice University
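The contrast Veeraraghavan draws can be made concrete with time-resolved data stored as a list of frames. Time gating keeps only the frames inside a chosen arrival-time window (for example, just the earliest, least-scattered photons), while an all-photons approach keeps every frame. A minimal sketch, assuming a fixed frame spacing given in whole picoseconds; the function name, parameters, and example frames are hypothetical.

```python
from typing import List

# Time gating on a toy time-resolved movie (list of 1D frames).
# Assumption: frame i arrives at t = i * frame_dt_ps picoseconds.


def time_gate(movie: List[List[float]], frame_dt_ps: int, t_min_ps: int, t_max_ps: int) -> List[float]:
    """Sum only the frames whose arrival time lies in [t_min_ps, t_max_ps]."""
    n = len(movie[0])
    gated = [0.0] * n
    for index, frame in enumerate(movie):
        t = index * frame_dt_ps
        if t_min_ps <= t <= t_max_ps:
            for x in range(n):
                gated[x] += frame[x]
    return gated


movie = [
    [0, 0, 1, 0, 0],              # earliest photons: sharp but few
    [0, 0.3, 0.4, 0.3, 0],        # slightly scattered
    [0.2, 0.2, 0.2, 0.2, 0.2],    # heavily scattered: uniform haze
]
earliest = time_gate(movie, frame_dt_ps=10, t_min_ps=0, t_max_ps=0)
all_photons = time_gate(movie, frame_dt_ps=10, t_min_ps=0, t_max_ps=20)
print(earliest)
print(all_photons)
```

The gated image stays sharp but discards most of the light; the all-photons sum keeps all of it but is blurred, which is why a model of how the blur evolves over time is needed before the later photons can contribute spatial information.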