Reviewed by Mila Perera, Nov 1 2022

Self-driving cars need to observe what is around them to drive safely and avoid obstacles in the same way that human drivers do.

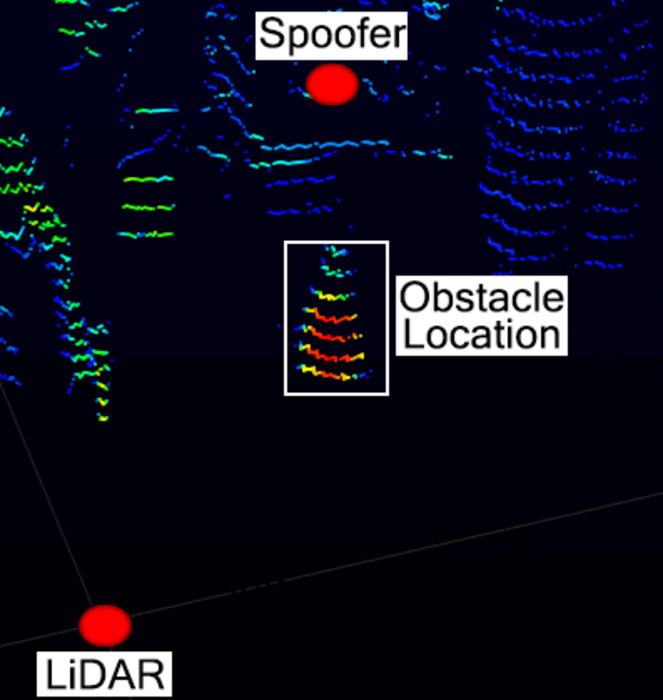

An animated GIF showing how the attack uses a laser to inject spoofed data points into the lidar sensor, causing it to discard genuine data about an obstacle in front of the sensor. Image Credit: Sara Rampazzi/University of Florida

The most advanced autonomous vehicles typically use lidar, a spinning radar-type device that acts as the car’s eyes. Lidar provides constant information about the distance to objects so the car can decide what actions are safe.

These eyes, however, can be tricked.

A new study shows that carefully timed lasers shined at an approaching lidar system can create a blind spot in front of the vehicle large enough to hide moving pedestrians and other obstacles. The deleted data makes the car assume the road is clear and safe to keep driving along, endangering anything in the blind spot.

This is the first time that lidar sensors have been tricked into deleting data about obstacles.

Researchers from the University of Florida, the University of Michigan, and the University of Electro-Communications in Japan revealed this vulnerability. The researchers also offer upgrades that can eliminate this weakness to safeguard people from malicious attacks.

The results are publicly available online and will be presented at the 2023 USENIX Security Symposium.

Lidar works by emitting laser light and capturing the reflections to calculate distances, much as a bat’s echolocation uses sound echoes. The attack creates fake reflections to confuse the sensor.
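The distance calculation described above can be sketched in a few lines of Python. This is a minimal illustration of the general time-of-flight principle, not code from the study; the function names are hypothetical and chosen only for this example.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact value by definition of the metre


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Distance to the reflecting surface, given the pulse's round-trip time.

    The light travels to the object and back, so the one-way
    distance is half of the total path.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Round-trip time a reflection from the given distance would take."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # Hypothetical obstacle 15 metres ahead of the sensor.
    delay = round_trip_for_distance(15.0)
    print(f"round trip: {delay * 1e9:.1f} ns")
    print(f"recovered distance: {distance_from_round_trip(delay):.2f} m")
```

A reflection from 15 metres away returns after roughly 100 nanoseconds, which gives a sense of how finely any injected light would have to be timed to register as a plausible reflection.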

We mimic the lidar reflections with our laser to make the sensor discount other reflections that are coming in from genuine obstacles. The lidar is still receiving genuine data from the obstacle, but the data are automatically discarded because our fake reflections are the only one perceived by the sensor.

Sara Rampazzi, Study Lead Author and Professor, Computer and Information Science and Engineering, University of Florida
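The effect Rampazzi describes can be illustrated with a toy model. The article does not say how a sensor chooses which reflection to keep, so the rule below (one echo kept per pulse, strongest wins) is purely an assumption for illustration, and every name and number is made up.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Echo:
    distance_m: float  # distance the sensor would report for this echo
    intensity: float   # relative received strength (arbitrary units)
    source: str        # "obstacle" or "attacker", used only to label the demo


def perceived_echo(echoes: List[Echo]) -> Optional[Echo]:
    """Toy sensor rule (an assumption): keep only the strongest echo per pulse."""
    if not echoes:
        return None
    return max(echoes, key=lambda e: e.intensity)


if __name__ == "__main__":
    pedestrian = Echo(distance_m=8.0, intensity=0.3, source="obstacle")
    spoofed = Echo(distance_m=2.0, intensity=0.9, source="attacker")

    # Without the attack, the genuine reflection is the one reported.
    print(perceived_echo([pedestrian]))

    # With the attack, the genuine reflection still arrives but is
    # discarded, because the toy sensor reports only one echo per pulse.
    print(perceived_echo([pedestrian, spoofed]))
```

In this sketch the pedestrian's echo is present in both cases; it simply loses out to the injected one, which mirrors the point that genuine data is received but discarded.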

The researchers demonstrated the attack on moving vehicles and robots, with the attacker placed about 15 feet away on the side of the road. In theory, more advanced equipment could carry it out from a greater distance. The technical requirements are basic, but the laser must be timed to the lidar sensor, and moving vehicles must be tracked to keep the laser pointed in the right direction.

It’s primarily a matter of synchronization of the laser with the lidar device. The information you need is usually publicly available from the manufacturer.

S. Hrushikesh Bhupathiraj, Study Co-Lead Author and Doctoral Student, Rampazzi’s Lab, University of Florida

Using this technique, the researchers could delete data for both static obstacles and moving pedestrians. In real-world experiments, they also showed that the attack could follow a slow-moving vehicle using basic camera tracking equipment. In simulations of autonomous vehicle decision-making, this data deletion caused a car to keep accelerating toward a pedestrian it could no longer see rather than stopping.

This vulnerability could be addressed by updating the lidar sensors or the software that interprets the raw data. For instance, manufacturers could teach the software to look for the tell-tale signatures of the spoofed reflections added by the laser attack.

Revealing this liability allows us to build a more reliable system. In our paper, we demonstrate that previous defense strategies aren’t enough, and we propose modifications that should address this weakness.

Yulong Cao, Study Primary Author and Doctoral Student, University of Michigan