Simple, high-quality 3-D cameras can be integrated into handheld devices. When Microsoft launched Kinect, a device that lets Xbox users control games with physical gestures, computer scientists started hacking it. As a result, there is growing demand for a Kinect-like sensor that provides more accurate depth information, works in all lighting conditions, and is power-efficient, inexpensive and compact.

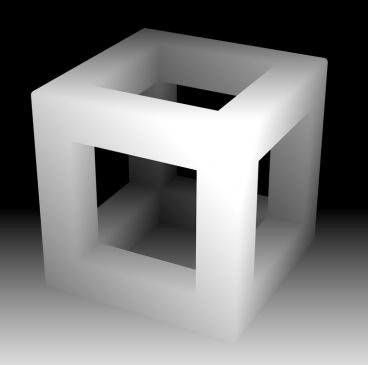

Depth-sensing cameras can produce 'depth maps' like this one, in which distances are depicted as shades on a gray-scale spectrum (lighter objects are closer, darker ones farther away).

Such a device should also be cheap enough to incorporate into a cellphone. That is the vision pursued by Vivek Goyal, the Esther and Harold E. Edgerton Associate Professor of Electrical Engineering, and his team at MIT’s Research Laboratory of Electronics.

The MIT researchers’ system uses the ‘time of flight’ of light particles to gauge depth: the longer a laser pulse takes to bounce back from an object, the farther away that object is. Traditional time-of-flight systems construct a ‘depth map’ of a scene in one of two ways. LIDAR (light detection and ranging) uses a scanning laser beam to measure the time of return for each point independently. Alternatively, the entire scene is illuminated with laser pulses and the returned light is recorded by an array of sensors.
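The time-of-flight relation can be made concrete with a small worked example. This is only an illustrative sketch, not code from the MIT system: the function names are hypothetical, and it assumes the pulse travels to the object and straight back at the speed of light in vacuum.

```python
# Speed of light in vacuum, in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def depth_from_round_trip(seconds: float) -> float:
    """Distance to an object given the round-trip time of a light pulse.

    The pulse covers the distance twice (out and back), hence the
    division by two.
    """
    return SPEED_OF_LIGHT * seconds / 2.0


def round_trip_from_depth(metres: float) -> float:
    """Inverse relation: round-trip time for an object at a given distance."""
    return 2.0 * metres / SPEED_OF_LIGHT


if __name__ == "__main__":
    # A pulse that returns after 10 nanoseconds came from roughly 1.5 m away.
    print(f"{depth_from_round_trip(10e-9):.3f} m")
```

The example also shows why timing precision matters: a few nanoseconds of round-trip time correspond to distances on the order of a metre.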

In contrast, the MIT researchers’ system employs only a single light detector, in effect a one-pixel camera. With some clever mathematical analysis, however, it can get by with firing the laser only a limited number of times. One technique is to pass the light emitted by the laser through a sequence of randomly generated patterns of light and dark squares, like irregular checkerboards. In experiments, the researchers found that the number of laser flashes, or checkerboard patterns, needed to construct an adequate depth map was about 5% of the number of pixels in the final image. A LIDAR system, by comparison, needs to transmit a separate laser pulse for every pixel. The crucial third dimension of the depth map is added by parametric signal processing, which assumes that all surfaces in the scene are flat planes.
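To give a feel for the pattern-based approach, here is a minimal sketch that generates random light/dark checkerboards and simulates what a one-pixel detector might record for each. It is an assumption-laden illustration rather than the researchers’ method: the function names are hypothetical, the detector reading is simplified to a single summed intensity (the real system works with the timing of the returned light), and no depth reconstruction is attempted.

```python
import random


def random_checkerboards(width, height, fraction=0.05, seed=0):
    """Generate random light (1) / dark (0) patterns.

    The number of patterns is `fraction` of the pixel count, mirroring the
    roughly 5% figure reported in the experiments.
    """
    rng = random.Random(seed)
    n_patterns = max(1, round(fraction * width * height))
    return [
        [[rng.randint(0, 1) for _ in range(width)] for _ in range(height)]
        for _ in range(n_patterns)
    ]


def single_pixel_reading(pattern, reflectance):
    """Simplified detector reading: total light returned through the pattern.

    Only squares where the pattern is light (1) contribute.
    """
    return sum(
        p * r
        for pattern_row, scene_row in zip(pattern, reflectance)
        for p, r in zip(pattern_row, scene_row)
    )


if __name__ == "__main__":
    width, height = 20, 20
    patterns = random_checkerboards(width, height)
    scene = [[1.0] * width for _ in range(height)]  # hypothetical uniform scene
    readings = [single_pixel_reading(p, scene) for p in patterns]
    print(f"per-pixel scanning would need {width * height} pulses")
    print(f"pattern-based approach uses {len(patterns)} flashes")
```

For a 20 × 20 image, per-pixel scanning would need 400 pulses, while the 5% figure corresponds to 20 patterned flashes.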